About Me

Hi there, I am Kevin. I am currently an AI researcher at TikTok, focusing on vision-language models (VLMs), video generation, and unified visual understanding and generation. I helped build Seaweed, a foundation model for video generation.

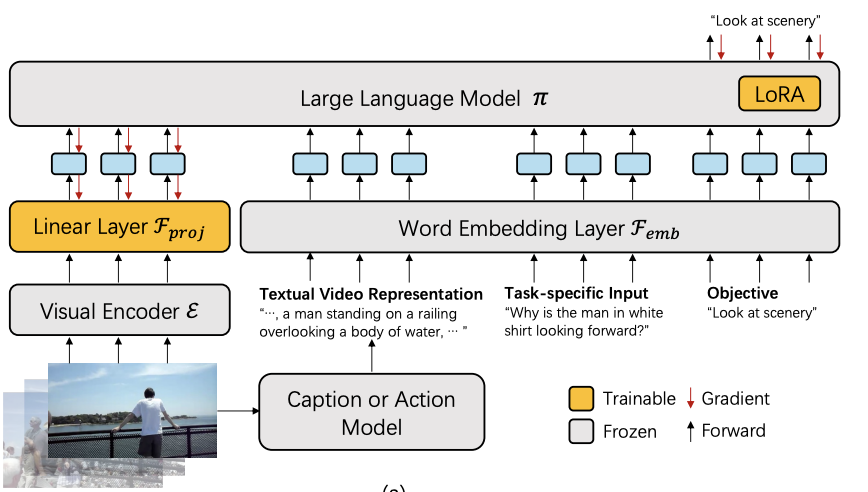

Previously, I earned my Master of Science degree at Brown University, where I was fortunate to collaborate closely with Professor Chen Sun and his group on video understanding. I also worked with Professor George Konidaris and his PhD student Haotian Fu on embodied agents. I earned my Bachelor of Science degree at NYU.

I grew up in Shanghai before moving to Scottsdale, AZ, for high school.

Selected Projects

Synthetic Video Enhances Physical Fidelity in Video Synthesis

Qi Zhao, Xingyu Ni, Ziyu Wang, Feng Cheng, Ziyan Yang, Lu Jiang*, Bohan Wang*

ICCV 2025

Contact Me

Email: qi_zhao [at] alumni [dot] brown [dot] edu